Legal Internet Use by Terrorists

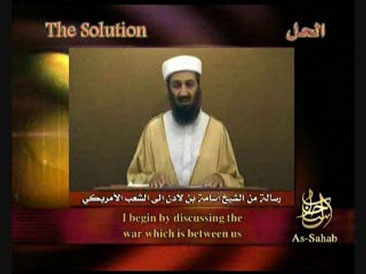

Introduction

One of the greatest features of the internet is that it is a powerful tool available to everyone. However, when such a tool falls into the wrong hands, there is little that regulators can do without threatening the very features of the internet that give it value. This short essay considers the inherent dilemma of choosing between a free and open internet and one that can be harnessed to further evil purposes. I’m not talking about actual attacks via information networks (i.e. cyberterrorism), as such behavior is simply destructive regardless of who is doing it. The question really gets interesting where regulation would require some kind of discrimination: for instance, allowing online community organization efforts by the Obama campaign but not by Al Qaeda (see chart below). The ultimate question I seek to answer is whether peaceful and otherwise legal uses of the internet should be regulated based on the user’s purpose. I believe that unless violence or other extreme circumstances are involved, the internet should remain as open and powerful a tool as possible.

Features of the Internet

The following list briefly considers (a) the internet’s value to both good and evil organizations and (b) what may be lost or significantly altered by counterterrorist regulation efforts.

Legal Uses of the Internet

Below is a breakdown of some of the legal uses of the internet that can be either devastating or beneficial depending on the user. For comparison, I discuss how terrorists use the internet to further their violent goals in largely the same manner that grassroots election campaigns use it to further democratic principles.

Discussion and Conclusion

Counterterrorism is in many ways simply a line-drawing exercise: deciding what we are willing to sacrifice in order to preserve our safety. For instance, while we are not ready to ban air travel completely, we are willing to submit ourselves to restrictions on what we can carry onto a plane. In a recent microcosm of the issue, Senator Joe Lieberman demanded that YouTube remove all videos from identified terrorist organizations. YouTube responded by removing only those that were violent enough to violate its own community standards, while defending both the organizations’ right to post legal nonviolent material and the overall benefit to society of a diverse range of views. I tend to agree with YouTube’s approach, and believe that content should be regulated only where there is violence, or the imminent threat of some kind of violence, in relation to that content.

First, when it comes to criminal and terrorist activity, we should regulate the violence they may use to achieve their goals, rather than their beliefs alone. We do not fear people for what they believe or desire; we fear what they might do to us to achieve their goals.

Second, a line drawn too far would simply do more harm than good for national security. Regardless of intent, prohibiting even peaceful communications amounts to outright censorship, which would only strengthen the resolve and hatred of terrorists and their potential supporters. Also, the automated and decentralized nature of the internet would turn most efforts at regulation into a futile game of Whac-A-Mole, with new sites popping up every time another is quashed. Any gain in security achieved by such measures would likely be far outweighed by the sympathy and support terrorists would receive as a result of such censorship.

Finally, I believe the freedom and neutrality that the internet represents are worth protecting. In many ways the internet is a technological embodiment of democratic and free-speech ideals, right now allowing me to publish my uncensored thoughts for the whole world to see, regardless of who I am and what I believe. Also, technologically, any filtering method that successfully blocked all content from certain users would have to be extremely invasive and likely overbroad (given the “Whac-A-Mole” problem stated above). And at the end of the day, the internet would be subject to the discretion of an unelected and largely unregulated body of decisionmakers.

National security is and should remain a top priority of our nation. However, any line drawn beyond violent content or imminent attack would be not only futile, but actually detrimental to both our security and the principles we’ve fought for since the inception of our country.

[1] - See Bobbitt, Philip, Terror and Consent: The Wars for the Twenty-First Century (New York: Alfred A. Knopf, 2008), p. 56.

-- StevenHwang - 18 Nov 2008

Steven, I'm a bit confused by where you draw the line. You seem to be saying that violent footage should be taken down, but you're also arguing that filtering would be overbroad and subject to abuse by an unregulated body. How do you reconcile these two statements? Even if you're only filtering for violent content, you still face the same general ills of filtration. Furthermore, how does a filter distinguish between CNN footage and an Al Qaeda beheading distributed for fear-mongering purposes? And in that case, should there necessarily be any filtration at all? Isn't that a fact for the world to see and for scholars and politicians to cite? Or maybe you want this line to apply only to content aggregators like YouTube, but not to the Internet at large? That would clear up the logical inconsistency in your statements, but I'm not sure that's a proper solution, either. -- KateVershov - 05 Dec 2008

You're right, I don't think I was very clear on the line I drew. I think the line should be drawn where the content actually causes or creates imminent physical violence (as opposed to simply depicting it). It would be very difficult to meet this standard--it would have to be something on the magnitude of outright instruction. For instance, if a terrorist organization were to post a call for its followers to riot at a particular time on a particular street or to assassinate a certain official, we should be able to take that down. I would take this even further and say that even if the message were hidden--e.g. if the content were ostensibly innocuous, but the government had really good reason to believe it was some kind of "trigger" for a coordinated attack--we should also be able to take that down. On the other hand, if they say that America is bad or simply depict violent actions, I might dislike them, but I do not think the content should be taken down. YouTube's own community standard is much stricter than mine, but I agree with their filtering based on CONTENT rather than by USER. I'll clear this up in my rewrite. Thanks for the comment! -- StevenHwang - 06 Dec 2008
